PROVIDENCE, R.I. [Brown University] — Aphasia, an impairment in speaking and understanding language after a stroke, is frustrating both for victims and their loved ones. In two talks Saturday, Feb. 16, 2013, at the conference of the American Association for the Advancement of Science in Boston, Sheila Blumstein, the Albert D. Mead Professor of Cognitive, Linguistic, and Psychological Sciences at Brown University, will describe how she has been translating decades of brain science research into a potential therapy for improving speech production in these patients. Blumstein will speak at a news briefing at 10 a.m. and at a symposium at 3 p.m.

About 80,000 people develop aphasia each year in the United States alone. Nearly all of these individuals have difficulty speaking. For example, some patients (nonfluent aphasics) have trouble producing sounds clearly, making it frustrating for them to speak and difficult for them to be understood. Other patients (fluent aphasics) may select the wrong sound in a word or mix up the order of the sounds. In the latter case, “kitchen” can become “chicken.” Blumstein’s idea is to use guided speech to help people who have suffered stroke-related brain damage to rebuild their neural speech infrastructure.

Blumstein has been studying aphasia and the neural basis of language her whole career. She uses brain imaging, acoustic analysis, and other lab-based techniques to study how the brain maps sound to meaning and meaning to sound.

Blumstein and other scientists believe that the brain organizes words into networks, linked both by similarity of meaning and similarity of sound. To say “pear,” a speaker will also activate other competing words like “apple” (which competes in meaning) and “bear” (which competes in sound). Despite this competition, normal speakers are able to select the correct word.

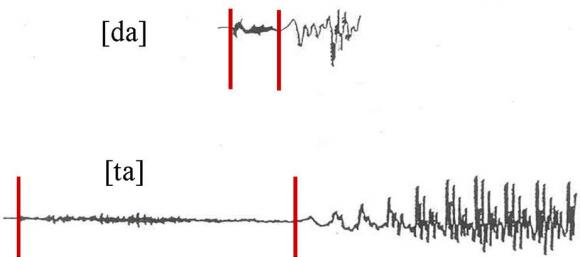

In a study published in the Journal of Cognitive Neuroscience in 2010, for example, she and her co-authors used functional magnetic resonance imaging to track neural activation patterns in the brains of 18 healthy volunteers as they spoke English words that had similar-sounding “competitors” (“cape” and “gape” differ subtly in the first consonant by voicing, i.e., the timing of the onset of vocal cord vibration). Volunteers also spoke words without similar-sounding competitors (“cake” has no voiced competitor in English; “gake” is not a word). The researchers found that neural activation within a network of brain regions was modulated differently when subjects said words that had competitors versus words that did not.

This competition-mediated difference is also apparent in speech production: words with competitors are produced differently from words without them. For example, the “t” in “tot” (which has a voiced competitor, “dot”) is produced with more voicing than the “t” in “top” (there is no “dop” in English). Through acoustic analysis of the speech of people with aphasia, Blumstein has shown that this difference persists, suggesting that their word networks are still largely intact.

An experimental therapy

The therapy Blumstein has begun testing takes advantage of what she and colleagues have learned about these networks.

“We believe that although the network infrastructure is relatively spared in aphasia, the word representations themselves aren’t as strongly activated as they are in normal subjects, leading to speech production impairments,” she said. “Our goal is to strengthen these word representations. In doing so it should not only improve production of trained words, but also have a cascading effect and strengthen the representations of words that are part of that word’s network.”

Much as physical therapy seeks to restore movement by guiding a patient through particularly crucial motions, Blumstein’s therapy is designed to restrengthen how the brain accesses its network to produce words by engaging patients in a series of carefully designed utterances.

“We hope to build up the representation of that word and at the same time influence its whole network,” she said.

Overall, the therapy is designed to last 10 weeks, with two sessions a week. In one step of the regimen, a classic technique, a therapist will ask patients to repeat certain training words with a deliberate, melodic intonation. In the next session, those words would be repeated without the chant-like tone.

“Having to produce words under different speaking conditions shapes and strengthens underlying word representations,” said Blumstein, who is affiliated with the Brown Institute for Brain Science.

Confronting the delicate distinctions of words in the network head-on, Blumstein asks patients to say words that sound similar, such as “pear” and “bear.” Explicitly saying similar words, Blumstein said, requires that their differences be accentuated, thus helping strengthen the brain’s ability to distinguish them.

Finally, the therapy builds upon these earlier exercises by encouraging patients to repeat words they have not been practicing. A patient’s ability to correctly repeat untrained words is an important test of whether the therapy can generalize to the broader networks of the training words.

In early testing with four patients, two fluent and two nonfluent, Blumstein said she has seen good results. After only two proof-of-concept sessions (one week’s worth of training), three of four patients showed improved precision in producing similar-sounding trained and untrained words, as measured via computer-assisted acoustic analysis. The patients also produced fewer speaking errors and needed fewer attempts to say what they were supposed to.

If the therapy proves successful, Blumstein said, one effect she’ll be watching for is whether it allows people with aphasia to speak more, not just more accurately. That would represent the fullest restoration of expression and language communication.