PROVIDENCE, R.I. [Brown University] — Men are red. Women are green.

Michael J. Tarr, a Brown University scientist, and graduate student Adrian Nestor have discovered this color difference in an analysis of hundreds of faces. They determined that men tend to have more reddish skin, while women tend to have more greenish skin.

The finding has important implications in cognitive science research, such as the study of face perception. But the information also has a number of potential industry or consumer applications in areas such as facial recognition technology, advertising, and studies of how and why women apply makeup.

“Color information is very robust and useful for telling a man from a woman,” said Tarr, the Sidney A. and Dorothea Doctors Fox Professor of Ophthalmology and Visual Sciences and professor of cognitive and linguistic sciences at Brown. “It’s a demonstration that color can be useful in visual object recognition.”

Tarr said the idea that color may help us to identify objects better has been controversial. But, he said, his and related findings show that color can nonetheless provide useful information.

Tarr and Nestor’s research is reported in the journal Psychological Science. The paper will be published online Dec. 8 and in print a few weeks later.

To conduct the study, Tarr needed plenty of faces. His lab analyzed about 200 images of Caucasian male and female faces (100 of each gender) compiled in a data bank at the Max Planck Institute in Tübingen, Germany. The faces were photographed using a 3-D scanner under the same lighting conditions and with no makeup. He then used a MATLAB program to analyze the amount of red and green pigment in the faces.
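For readers curious how such a measurement might look in code, here is a minimal Python sketch. It is not the lab's MATLAB program, which the release does not describe. The function name `red_green_index`, the formula (R - G) / (R + G), and the toy pixel values are all assumptions chosen for illustration.

```python
from statistics import mean

def red_green_index(pixels):
    """Mean (R - G) / (R + G) over (r, g, b) pixels; higher means redder.

    Assumption: this simple ratio stands in for whatever color measure
    the study actually used.
    """
    values = []
    for r, g, _b in pixels:
        total = r + g
        if total > 0:  # skip pixels with no red or green to avoid dividing by zero
            values.append((r - g) / total)
    return mean(values) if values else 0.0

# Hypothetical toy "faces": two skin-tone pixels each, not real data.
face_a = [(200, 150, 130), (210, 160, 140)]
face_b = [(190, 160, 135), (200, 170, 145)]

index_a = red_green_index(face_a)
index_b = red_green_index(face_b)
print(index_a > index_b)  # face_a sits further toward the red end
```

Under this toy measure, a face with a higher index sits closer to the red end of the red-green axis, and one with a lower index sits closer to the green end.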

Additionally, Tarr and his lab relied on a large number of other faces photographed under similar controlled conditions. (Tarr has made them available on his web site, www.tarrlab.org.)

What he found: Men proved to have more red in their faces and women more green, contrary to prior assumptions.

“If it is on the more red end of the spectrum (the face) had a higher probability of being male. Conversely, if it is on the green end of the spectrum (the face) had a higher probability of being female,” Tarr said.

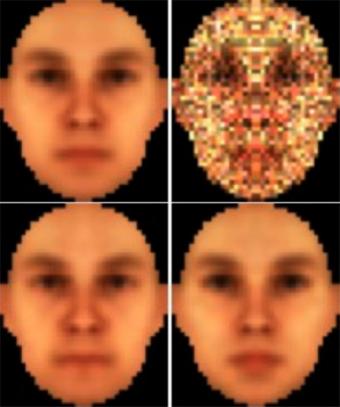

To test the concept further, Nestor and Tarr used an androgynous image created by averaging the 200 initial faces. Trial by trial, they randomly clouded the face with “visual noise” that included either more red or more green. The “noise” was not unlike static that can appear on a television screen with no signal.
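The idea of clouding a face with red-or-green noise can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the researchers' procedure: the function `add_red_green_noise`, the per-pixel uniform noise, the `strength` parameter, and the flat-colored 4-by-4 "face" are all hypothetical.

```python
import random

def clamp(value):
    """Round to an integer and keep it inside the 0-255 channel range."""
    return max(0, min(255, round(value)))

def add_red_green_noise(image, strength, rng):
    """Return (noisy image, noise field) for a grid of (r, g, b) pixels.

    Each pixel gets one random shift: a positive shift adds red and
    removes green, a negative shift does the opposite. Blue is untouched.
    """
    noisy, noise = [], []
    for row in image:
        noisy_row, noise_row = [], []
        for r, g, b in row:
            shift = rng.uniform(-strength, strength)
            noisy_row.append((clamp(r + shift), clamp(g - shift), b))
            noise_row.append(shift)
        noisy.append(noisy_row)
        noise.append(noise_row)
    return noisy, noise

# Hypothetical stand-in for the averaged face: a flat 4x4 skin-tone patch.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
base = [[(180, 150, 130)] * 4 for _ in range(4)]
noisy_face, noise_field = add_red_green_noise(base, 30, rng)
```

Keeping the noise field alongside the noisy image matters, because the later analysis looks at the noise itself rather than at the underlying face, which never changes.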

Subjects were then asked to decide on the gender of the image, using nothing more than the random shape and color patterns over the sexually ambiguous face as a guide. Tarr describes the effect as a “superstitious hallucination,” similar to being in the shower and hearing the doorbell or telephone even when neither rings.

Three Brown University students participated in the experiment for pay, and they all had normal or corrected vision with no color blindness. Each observer handled about 20,000 trials spread across 10 one-hour sessions.

Once the study was complete, the images identified by subjects as male or female were divided into two piles according to gender.

Each pile of images was then analyzed to determine the average color content across various locations in the images.

Across much of both sets of face images, Nestor and Tarr found that the “male” piles were redder and the “female” piles greener.
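The two-pile comparison resembles what vision researchers often call a classification image: average the noise from trials sorted by the observer's answer, then compare the averages. The Python sketch below shows that general idea with made-up numbers. The function names, the flat-list noise format, and the sign convention (positive means redder) are assumptions, not details from the paper.

```python
def mean_field(fields):
    """Pixel-wise mean of equally sized noise fields (flat lists of floats)."""
    count = len(fields)
    return [sum(values) / count for values in zip(*fields)]

def classification_image(noise_fields, responses):
    """Mean noise on 'male' trials minus mean noise on 'female' trials."""
    male = [f for f, r in zip(noise_fields, responses) if r == "male"]
    female = [f for f, r in zip(noise_fields, responses) if r == "female"]
    if not male or not female:
        raise ValueError("need at least one trial for each response")
    return [m - f for m, f in zip(mean_field(male), mean_field(female))]

# Hypothetical toy data: three trials, two pixels each; positive = redder noise.
fields = [[0.5, -0.1], [0.3, 0.1], [-0.4, 0.0]]
answers = ["male", "male", "female"]
result = classification_image(fields, answers)
```

In this toy case the first pixel comes out positive, meaning the noise there was redder on "male" trials, while the second pixel shows no difference between the piles.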

Such differences are not absolute (some women’s faces are much redder and some men’s faces are much greener), but overall, across this and related studies, Tarr has determined that observers use the color of a face when trying to identify its gender. That is particularly true when the shape of the given face is ambiguous or hidden.

Another study found, for example, that observers are quite sensitive to the color of faces when the facial images are blurred to the point where the face shape is almost impossible to see.

Ongoing funding from the National Science Foundation and the Temporal Dynamics of Learning Center, which the NSF also funds, supported the study.