PROVIDENCE, R.I. [Brown University] — The motion we perceive with our eyes plays a critical role in guiding our feet as we move through the world, Brown University research shows.

The work was conducted in Brown’s Virtual Environment Navigation Lab, or VENLab, one of the largest virtual reality labs in the country. It appears online in Current Biology and will be featured on the journal’s Dec. 4, 2007, cover. The findings shed important new light on the phenomenon of optic flow, the perceived motion of visual objects that helps in judging distance and avoiding obstacles while walking, driving and even landing a plane.

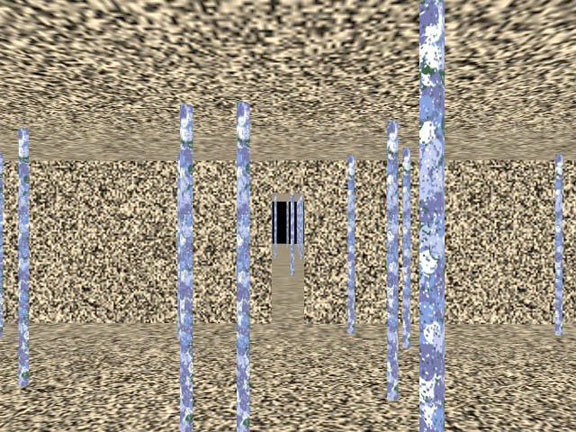

Perception in action, or the intersection of how we see and how we move, is the focus of research in the laboratory of William Warren, chairman of Brown’s Department of Cognitive and Linguistic Sciences. In the VENLab, Warren and his team have studied optic flow by having human subjects wear virtual reality goggles and navigate around virtual obstacles in a darkened room.

In 2001, the Warren lab showed for the first time that people use optic flow to help them steer toward a target as they walk. But what happens when you are standing still? If you are not walking and not getting the constant stream of visual information that optic flow provides, how do you begin moving toward a target? How do you know which way to direct your first step?

To answer this question, the team created a virtual display that simulates a prism, bending light so that the visual scene shifts to one side. The target – in this case, a virtual doorway toward which subjects were told to walk – appeared to be farther to the right than it actually was. A total of 40 subjects ran through about 40 trials each, with everyone trying to walk through the virtual doorway while wearing the simulated prism. Half of those subjects had optic flow, or a steady stream of visual information, available to them. The other half did not.

The researchers found that, on average, all 40 subjects missed the doorway by about five feet on their first few tries. But after a while, subjects adapted and were able to walk straight toward the doorway. Then the simulated prism was removed, and subjects were again asked to walk to the virtual doorway. Surprisingly, they all missed their mark on the opposite side, because their brains and bodies had adapted to the prism. After a few more tries, subjects readjusted and were again able to walk straight to the doorway.

Hugo Bruggeman, a postdoctoral research fellow in the Warren lab who led the research, said the kicker came when they compared data from subjects who had optic flow available during the trials with data from those who did not. When subjects had optic flow, they took a straight path toward the doorway and made it, on average, in just three tries. When optic flow was eliminated, and subjects had only a lone target to aim for, it took an average of 20 tries before they walked straight to the target.

“With optic flow, it is easier to walk in the right direction,” Bruggeman said. “Subjects adapted seven times faster. This suggests that with a continuous flow of visual information, your brain allows you to rapidly and accurately adapt your direction of walking. So we’re constantly recalibrating our movements and our actions based on information such as optic flow.”

This finding could have practical applications, particularly in robotics, where it could help produce machines with more accurate guidance capabilities. But Warren said the real value of the work lies in the deeper scientific principle it highlights.

“We tend to think that the structures of the brain dictate how we perceive and act in the world,” Warren said. “But this work shows that the world also influences the structures of the brain. It’s a loop. The organization in the brain must reflect the organization and information in the world. And this is a universal principle. All animals that move use optic flow to navigate. So whether you’re a fly landing on a leaf or a pilot landing on a runway, you may be using the same source of sensory information to carry out your task.”

Wendy Zosh, a graduate student in the Department of Cognitive and Linguistic Sciences, also assisted in the research.

The National Eye Institute funded the work.